OpenAI has released GPT-4, the latest version of the language model that powers ChatGPT. The model is multimodal: it accepts both text and image inputs and generates text outputs. Although it is less capable than humans in many real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks.

Capabilities

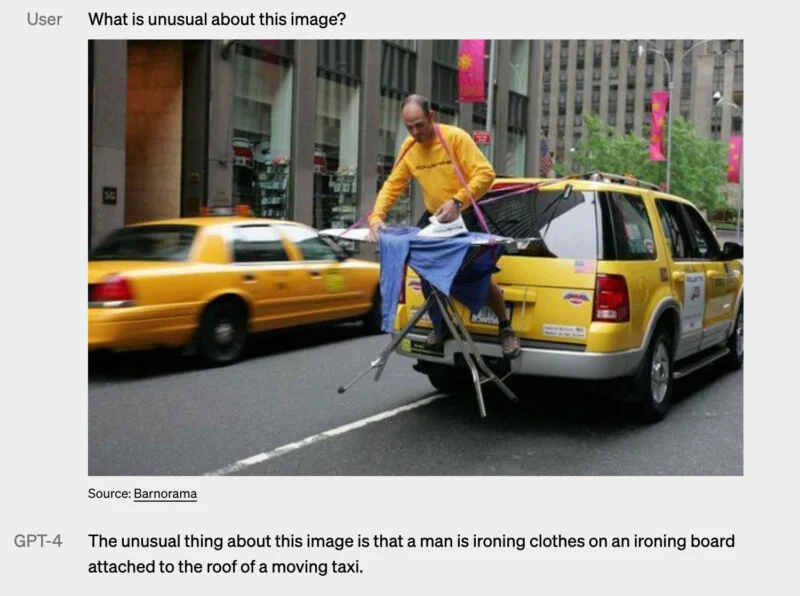

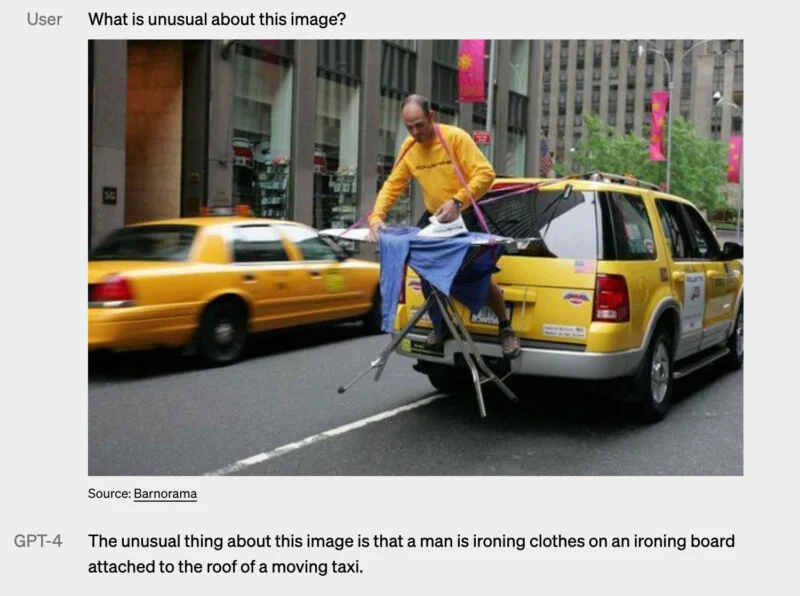

Compared to its predecessor, GPT-3.5, GPT-4 is more reliable, more creative, and able to handle more nuanced instructions. It can accept prompts that combine text and images, allowing users to specify tasks across a range of domains. Inputs can now include documents that mix text with photographs, diagrams, or screenshots.


OpenAI tested the model on a variety of benchmarks, including simulated exams designed for humans. GPT-4 excelled in these tests, scoring around the top 10% of test takers on a simulated bar exam. In comparison, GPT-3.5 scored around the bottom 10%.

The model’s improvements are evident across these tests: on the Uniform Bar Exam, the LSAT, SAT Math, and SAT Evidence-Based Reading & Writing, GPT-4 scored in the 88th percentile or above.

Another significant difference between GPT-4 and its predecessors is steerability: developers and (eventually) ChatGPT users can now prescribe the AI’s style and task by describing those directions in the “system” message. You can also converse with the chatbot until it “understands” what you truly mean or picks up your style of writing. OpenAI’s research post on GPT-4 includes examples.
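To make the role of the “system” message concrete, here is a minimal sketch of how a chat-style request might be assembled. It assumes the role-tagged message list used by OpenAI’s chat API (`system` and `user` roles) and the model name `gpt-4`; the helper `build_chat_request` and the sample prompts are hypothetical and only illustrate the idea. The sketch builds the request body without sending it anywhere.

```python
import json


def build_chat_request(system_prompt, user_prompt, model="gpt-4"):
    """Assemble a chat-style request body (hypothetical helper).

    The system message comes first and describes the style and task;
    the user message carries the actual question.
    """
    if not system_prompt.strip():
        raise ValueError("system_prompt must not be empty")
    return {
        "model": model,  # assumed model identifier
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
    }


# Illustrative prompts, not taken from the article.
request = build_chat_request(
    system_prompt="You are a tutor who answers only with guiding questions.",
    user_prompt="How do I solve 3x + 2 = 14?",
)
print(json.dumps(request, indent=2))
```

Changing only the system prompt, while leaving the user message untouched, is what lets a developer alter the assistant’s tone or task without rewriting the conversation itself.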

Limitations

Despite its impressive capabilities, GPT-4 has limitations similar to those of earlier GPT models. It is not fully reliable: it can “hallucinate” facts and make reasoning errors. Still, GPT-4 makes these errors significantly less often than previous models.

Another limitation of GPT-4 is its lack of knowledge of events that occurred after September 2021, the cutoff date for its training data. It also does not learn from experience, and it can sometimes make simple reasoning errors or accept obviously false statements from users. Further, like humans, GPT-4 can fail at hard problems, for example by introducing security vulnerabilities into the code it produces.

Availability

GPT-4 is available through ChatGPT Plus and as an API that developers can use to build applications and services. ChatGPT Plus is OpenAI’s $20-per-month ChatGPT subscription. Depending on traffic, OpenAI may introduce a new subscription level for higher-volume usage. Eventually, the company hopes to offer some number of free GPT-4 queries so that those without a subscription can try it too.

Comparing GPT-4 with other large language models could be highly insightful. Chat GPT Español might even create a chart highlighting their key differences, such as parameters, strengths, and potential applications.